An open problem in machine learning is how to implement contextualization correctly.

The human brain is able to avoid both false positives and false negatives when recognizing objects in unusual contexts; current ML systems are unable to do so and usually avoid only one type of error, while erring on the side of the other.

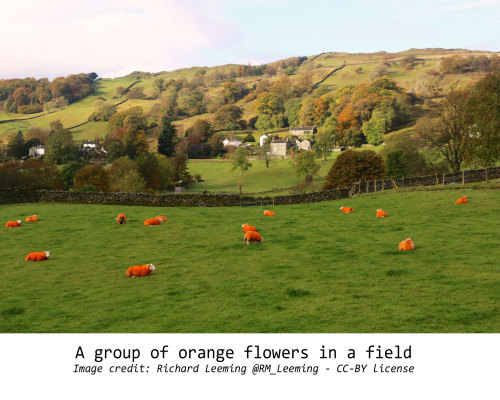

Take the example of a photoshopped picture showing orange sheep on a green field. An ML system (which hasn’t been specifically trained for this case) is likely to label the picture as “orange flowers on a green field”, as it is unable to decouple the context (the green grassy field) from the content (the sheep) and its features (being orange).

Moreover, in order to label the picture as “orange flowers on a green field”, the ML system has to have seen thousands of pictures of flowers and fields. By contrast, a human would be able to correctly label the picture having seen a sheep only once.

How is it that humans are able to:

- Correctly label the image,

- Even if the content and the context are unexpected,

- With only a few observations,

- With unsupervised learning?

In the paper below, I answer these questions and propose the architecture of an AI system that would be able to perform perceptual recognition, contextualization, and meaning extraction just as well as a human.